MedMod: Multimodal Benchmark for Medical Prediction Tasks with Electronic Health Records and Chest X-Ray Scans

Jul 1, 2025


Shaza Elsharief

Saeed A. Shurrab

Baraa Al Jorf

Leopoldo Julian Lechuga Lopez

Krzysztof J. Geras

Farah E. Shamout

Abstract

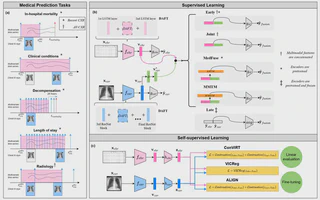

Multimodal machine learning provides many opportunities for developing models that integrate multiple modalities and mimic decision-making in the real world, such as in medical settings. However, benchmarks involving multimodal medical data are scarce, especially for routinely collected modalities such as Electronic Health Records (EHR) and chest X-ray images (CXR). To help advance multimodal learning for real-world prediction tasks, we present MedMod, a multimodal medical benchmark with EHR and CXR that uses the publicly available datasets MIMIC-IV and MIMIC-CXR, respectively. MedMod comprises five clinical prediction tasks: clinical conditions, in-hospital mortality, decompensation, length of stay, and radiological findings. We extensively evaluate several multimodal supervised learning models and self-supervised learning frameworks, and we make all of our code and models open-source.

Type

Publication

In Conference on Health, Inference, and Learning (CHIL)