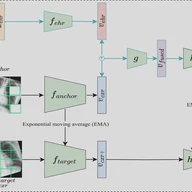

Self-supervised learning methods for medical images primarily rely on the imaging modality during pretraining. Although such approaches deliver promising results, they do not take advantage of the associated patient or scan information collected within Electronic Health Records (EHR). This study aims to develop a multimodal pretraining approach for chest radiographs that incorporates EHR data as an additional modality during training. We propose to incorporate EHR data during self-supervised pretraining with a Masked Siamese Network (MSN) to enhance the quality of chest radiograph representations. We investigate three types of EHR data: demographic, scan metadata, and inpatient stay information. We evaluate the multimodal MSN on three publicly available chest X-ray datasets, MIMIC-CXR, CheXpert, and NIH-14, using two vision transformer (ViT) backbones, specifically ViT-Tiny and ViT-Small. In assessing the quality of the representations through linear evaluation, our proposed method demonstrates significant improvement compared to vanilla MSN and state-of-the-art self-supervised learning baselines. In particular, our proposed method achieves an improvement of 2% in the Area Under the Receiver Operating Characteristic Curve (AUROC) compared to vanilla MSN and 5% to 8% compared to other baselines, including uni-modal ones. Furthermore, our findings reveal that demographic features provide the most significant performance improvement. Our work highlights the potential of EHR-enhanced self-supervised pretraining for medical imaging and opens opportunities for future research to address limitations in existing representation learning methods for other medical imaging modalities, such as neuro-, ophthalmic, and sonar imaging.

Sep 28, 2024

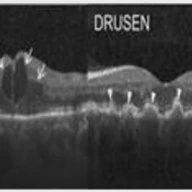

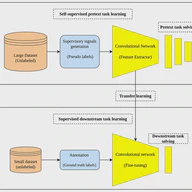

Retinal disorders are a common type of eye disease with several causes, such as aging, diabetes, and premature birth. Optical Coherence Tomography (OCT) is a medical imaging method for capturing volumetric scans of the human retina for diagnostic purposes. This research compared two pretraining approaches, Self-Supervised Learning (SSL) and Transfer Learning (TL), for training a ResNet34 architecture, with the aim of building a computer-aided diagnosis tool for recognizing retinal disorders. The methodology employs a convolutional auto-encoder as a generative SSL pretraining method. The experiments use a dataset of 109,309 retina OCT images covering three medical conditions, Choroidal Neovascularization (CNV), Diabetic Macular Edema (DME), and DRUSEN, as well as the NORMAL condition. The SSL ResNet34 achieved better overall accuracy, sensitivity, and specificity (95.2%, 95.2%, and 98.4%, respectively) than the TL ResNet34 (90.7%, 90.7%, and 96.9%, respectively). In addition, the SSL pretraining approach significantly reduced the number of epochs required for training in comparison to both TL pretraining and previous research performed on the same dataset with comparable performance.

May 3, 2023
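For readers unfamiliar with how accuracy, sensitivity, and specificity are typically derived for a multi-class task such as the four-class OCT problem above, here is a minimal sketch in Python. It assumes macro-averaging over one-vs-rest classes, which the abstract does not specify; the function name `macro_rates` and the confusion-matrix counts are purely illustrative and are not taken from the study.

```python
from typing import Dict, List


def macro_rates(confusion: List[List[int]]) -> Dict[str, float]:
    """Overall accuracy plus macro-averaged sensitivity and specificity.

    confusion[i][j] counts samples with true class i and predicted class j.
    Assumes every class has at least one sample (no zero denominators).
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n))

    sensitivities, specificities = [], []
    for k in range(n):
        tp = confusion[k][k]
        fn = sum(confusion[k]) - tp                       # true k, predicted other
        fp = sum(confusion[i][k] for i in range(n)) - tp  # true other, predicted k
        tn = total - tp - fn - fp
        sensitivities.append(tp / (tp + fn))
        specificities.append(tn / (tn + fp))

    return {
        "accuracy": correct / total,
        "sensitivity": sum(sensitivities) / n,
        "specificity": sum(specificities) / n,
    }


# Toy counts (hypothetical, NOT the paper's data); rows and columns are
# ordered CNV, DME, DRUSEN, NORMAL with 10 samples per class.
toy = [
    [9, 1, 0, 0],
    [0, 10, 0, 0],
    [1, 0, 8, 1],
    [0, 0, 0, 10],
]
rates = macro_rates(toy)
print(rates)
```

When every class has the same number of samples, as in this toy matrix, macro-averaged sensitivity equals overall accuracy by construction, while specificity is usually higher because each one-vs-rest split has many more negatives than positives.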

The scarcity of high-quality annotated medical imaging datasets is a major problem that hinders machine learning applications in medical imaging analysis and impedes the field's advancement. Self-supervised learning is a recent training paradigm that enables learning robust representations without the need for human annotation, which makes it an effective solution to the scarcity of annotated medical data. This article reviews the state-of-the-art research directions in self-supervised learning approaches for image data, with a focus on their applications in medical imaging analysis. The article covers a set of the most recent self-supervised learning methods from the computer vision field that are applicable to medical imaging analysis and categorizes them as predictive, generative, and contrastive approaches. Moreover, the article covers 40 of the most recent research papers on self-supervised learning in medical imaging analysis, aiming to shed light on recent innovation in the field. Finally, the article concludes with possible future research directions.

Jun 28, 2022